
Introduction

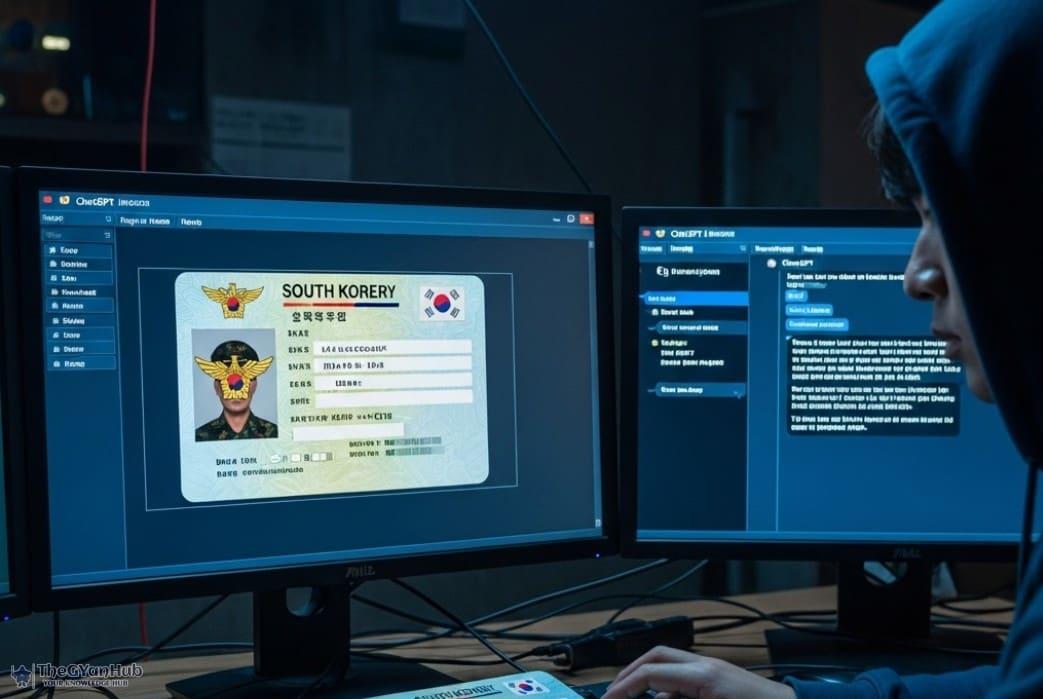

North Korean hackers have reportedly used ChatGPT, OpenAI's AI chatbot, to fabricate fake military identification cards. The forgeries were part of a broader cyberattack campaign targeting South Korea, and they have raised concerns about the misuse of AI technologies in cyber warfare.

The Role of ChatGPT in Cyber Attacks

ChatGPT, developed by OpenAI, can generate human-like text and, in current versions, images. It is designed to help users with a wide range of tasks, but the same capabilities can be exploited for malicious purposes. In this case, hackers reportedly used ChatGPT to produce realistic-looking military IDs that could be used to deceive personnel or gain access to secure systems.

How the Attack Unfolded

The attack reportedly involved fake military IDs created with ChatGPT. The forged IDs were then used in a phishing campaign targeting South Korean military and government officials, with the goal of gaining unauthorized access to sensitive information and disrupting operations.

Implications for Cybersecurity

This incident highlights the growing threat of AI-enhanced cyberattacks. As AI technologies become more sophisticated, so do the methods cybercriminals use. AI-generated fake identities pose a significant challenge for cybersecurity experts, who must now develop new strategies to detect and prevent such threats.

Responses from South Korea

In response to the attack, South Korean authorities have stepped up their cybersecurity measures. They are working closely with international partners to trace the attack's origins and prevent future incidents, and they are also pushing to train personnel to recognize and respond to phishing attempts.

International Reactions

The international community has expressed concern over the misuse of AI technologies in cyber warfare. This incident underscores the need for global cooperation in regulating AI applications and ensuring they are not used for harmful purposes. Several countries are now calling for stricter controls on AI development and deployment.

Future of AI in Cybersecurity

While AI poses significant risks, it also offers potential solutions. AI-driven tools can strengthen cybersecurity defenses, detect anomalies, and respond to threats in real time. The challenge lies in balancing the benefits of AI against the need to prevent its misuse.

Conclusion

The use of ChatGPT by North Korean hackers to create fake military IDs is a wake-up call for the global cybersecurity community. It highlights the urgent need for robust defenses against AI-enhanced threats and underscores the importance of international collaboration in addressing these challenges.
